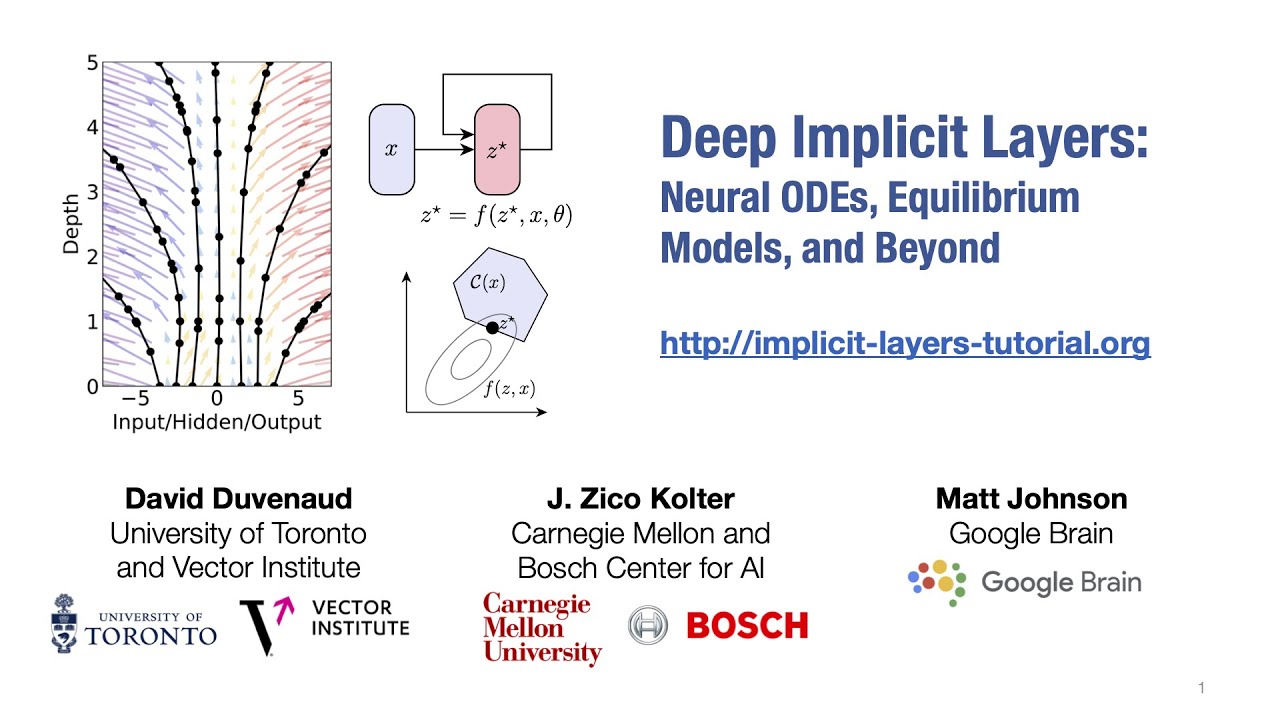

NeurIPS 2020 Tutorial: Deep Implicit Layers


In deep learning, the search for more efficient and more expressive neural network architectures has led to a rediscovery of implicit layers, and in particular of Deep Equilibrium Models (DEQs). Rather than spelling out how to compute an output from an input, an implicit layer states a condition that its output must satisfy. This offers a fresh perspective on hard problems in machine learning. Before looking more closely at DEQs, let's take a moment to recall how the field got here.

A Brief History of Implicit Layers

As early as 1987, machine learning researchers were experimenting with models defined by differential equations and fixed-point equations. These models later fell out of favor and were replaced by more explicit network structures. In recent years interest in these ideas has returned, fueled by modern tools and techniques in network architecture and automatic differentiation.

Researchers have revisited implicit layers such as differential equation layers, whose output is computed by a numerical integrator like a Runge-Kutta method, with applications that include generating carbon monoxide crystals. The renewed interest in these layers can be attributed to the fact that they are differentiable and adaptable, so they can be trained end to end like any other layer.
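To make the idea of a differential equation layer concrete, here is a minimal sketch in plain Python. It assumes the layer's output is the state at time t1 of dz/dt = f(t, z) started from the input z0, integrated with the classical fourth-order Runge-Kutta scheme. The names `rk4_step`, `ode_layer`, and `decay`, and the step count, are illustrative choices rather than anything taken from the tutorial.

```python
import math

def rk4_step(f, z, t, h):
    """Advance the state z by one classical Runge-Kutta (RK4) step of size h."""
    k1 = f(t, z)
    k2 = f(t + h / 2, z + h / 2 * k1)
    k3 = f(t + h / 2, z + h / 2 * k2)
    k4 = f(t + h, z + h * k3)
    return z + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)

def ode_layer(f, z0, t0=0.0, t1=1.0, n_steps=20):
    """Hypothetical 'layer': map the input z0 to the ODE state at time t1."""
    h = (t1 - t0) / n_steps
    z, t = z0, t0
    for _ in range(n_steps):
        z = rk4_step(f, z, t, h)
        t += h
    return z

# Toy dynamics dz/dt = -z, whose exact solution is z(t) = z0 * exp(-t).
decay = lambda t, z: -z
z1 = ode_layer(decay, 1.0)
print(z1, math.exp(-1.0))
```

In a trained model, f would be a small neural network with learnable parameters; the scalar decay dynamics are used here only because the exact answer is known and easy to compare against.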

Exploring the Boundaries of Optimizability

In the late 2010s, a number of research groups began working with different variants of optimization problems as layers. Some even developed ways to automatically transform convex optimization problems into layers, opening up new directions for exploration.

One domain that has rekindled interest in implicit layers is differentiable optimization, where the solution of an optimization problem is itself treated as a layer that gradients can pass through. We no longer have to limit ourselves to one-size-fits-all building blocks: a layer can encode the structure of the task at hand. It's like having a toolkit with a specialized instrument for every job.

DEQs: Solving Equilibrium Problems with Grace

Deep Equilibrium Models, or DEQs, have emerged as a powerful tool in the deep learning arsenal. A DEQ defines its output as the equilibrium, or fixed point, of a layer: the point that the layer maps back to itself for a given input. The beauty of DEQs lies in the fact that the whole model is represented by a single layer, applied until its output stops changing, which makes them a cost-effective choice.

Recent work has addressed some of the open questions surrounding DEQs, including when an equilibrium point is guaranteed to exist, whether it is unique, and whether it is stable. As a result, we now have a clearer understanding of the capabilities and limitations of DEQs.
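The equilibrium idea can be sketched in a few lines of plain Python. The example assumes a layer of the form f(z, x) = tanh(W z + x) and finds a point z* with z* = f(z*, x) by simple repeated application. The weight matrix W is chosen so that every row has absolute sum below 1, which makes the layer a contraction and therefore guarantees a unique equilibrium for this toy case. The function names and numbers are illustrative, and practical DEQ implementations typically use faster root-finding methods than plain iteration.

```python
import math

def deq_layer(z, x, W):
    """One application of the layer f(z, x) = tanh(W z + x)."""
    return [
        math.tanh(sum(w_ij * z_j for w_ij, z_j in zip(row, z)) + x_i)
        for row, x_i in zip(W, x)
    ]

def solve_equilibrium(x, W, tol=1e-10, max_iter=500):
    """Iterate z <- f(z, x) from z = 0 until successive iterates agree."""
    z = [0.0] * len(x)
    for step in range(1, max_iter + 1):
        z_next = deq_layer(z, x, W)
        gap = max(abs(a - b) for a, b in zip(z_next, z))
        z = z_next
        if gap < tol:
            break
    return z, step

# Assumed toy weights: each row's absolute sum is 0.5, so f is a contraction.
W = [[0.3, -0.2], [0.1, 0.4]]
x = [0.5, -1.0]

z_star, n_steps = solve_equilibrium(x, W)
# At an equilibrium, applying the layer once more leaves z_star unchanged.
residual = max(abs(a - b) for a, b in zip(deq_layer(z_star, x, W), z_star))
print(n_steps, residual)
```

Without a condition like the contraction property assumed here, the iteration may fail to converge or may converge to different points from different starting values, which is why existence, uniqueness, and stability matter in practice.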

Unleashing the Power of DEQs

DEQs have shown strong performance across a variety of deep learning tasks. In language modeling, DEQ variants of Transformer and TrellisNet models have been reported to match or exceed their explicit counterparts while consuming less memory, a testament to their efficiency.
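The lower memory use is usually explained by implicit differentiation: the gradient at the equilibrium can be computed from the equilibrium point alone, without storing the intermediate iterates of the forward solve. The sketch below shows this for an assumed scalar layer f(z, x) = tanh(w z + x). Differentiating z* = f(z*, x) with respect to x gives dz*/dx = (df/dx) / (1 - df/dz), evaluated at z*. The function names and the values of w and x are illustrative.

```python
import math

def f(z, x, w):
    """Scalar toy layer f(z, x) = tanh(w * z + x)."""
    return math.tanh(w * z + x)

def equilibrium(x, w, tol=1e-13, max_iter=1000):
    """Find z* = f(z*, x) by iteration; only the latest iterate is kept."""
    z = 0.0
    for _ in range(max_iter):
        z_next = f(z, x, w)
        if abs(z_next - z) < tol:
            return z_next
        z = z_next
    return z

def implicit_grad(x, w):
    """dz*/dx from the implicit function theorem, using only z*."""
    z_star = equilibrium(x, w)
    s = 1.0 - z_star ** 2        # tanh'(w z* + x), because z* = tanh(w z* + x)
    return s / (1.0 - w * s)     # (df/dx) / (1 - df/dz) at the equilibrium

w, x = 0.5, 0.3                  # |w| < 1 keeps the toy layer a contraction
g_implicit = implicit_grad(x, w)

# Check against a central finite difference of the solver itself.
eps = 1e-6
g_numeric = (equilibrium(x + eps, w) - equilibrium(x - eps, w)) / (2 * eps)
print(g_implicit, g_numeric)
```

In a full-size model the scalar division becomes a linear solve involving the layer's Jacobian, but the principle is the same: the backward pass depends on the equilibrium and not on how many iterations were needed to reach it.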

Applying DEQ models to vision tasks, however, posed challenges, particularly when multiple feature resolutions are needed. To address this, a multi-scale extension of DEQ models was introduced. It represents features at several spatial scales simultaneously, which makes DEQs applicable to image classification and semantic segmentation.

Implicit Layers in Action

Building an entire deep learning model around a single implicit layer, as DEQs do, has proven effective. These models handle complex tasks and deliver results that rival existing state-of-the-art models. From image classification on the ImageNet dataset to semantic segmentation on the Cityscapes dataset, the multi-scale DEQ model is competitive with strong explicit baselines.

In conclusion, the resurgence of implicit layers in deep learning, embodied by DEQs, marks an exciting chapter in the field. As research on these architectures continues, it promises further gains in efficiency and adaptability. Implicit layers are here to stay, and they are changing the way we approach complex problems in machine learning.

Let's embrace this new era with open arms, for the journey of discovery has only just begun. The possibilities are as infinite as the universe itself, waiting to be unlocked by the brilliance of human creativity and the power of deep learning.